Author: Rohit Agarwal, Fractal Analytics

Reposted from Fractal Analytics Blog Post of February 25, 2015

http://blog.fractalanalytics.com/advanced-analytics-2/scaling-analytics-through-knime/

Fortune 100 companies like Amazon and Google have been moving to institutionalize analytics across business processes, and the results are there for all to see. Achieving scale is a significant challenge in this process. Operationally, there are two ends to the spectrum. At one end is scale through people: the focus is on the analytics delivery team, and the constraint is that output will only ever be proportional to input. At the other end is scale through automation: a black-box approach where every decision, business or analytical, is hard-coded into the system, which limits the value of its outputs to the end user. Neither option delivers economies of scale.

Advanced analytics platforms are used to build solutions from scratch and to scale workspaces within and across teams. Among these, KNIME is an open source platform that Gartner, Inc. has positioned among the “Leaders” in its Magic Quadrant for Advanced Analytics Platforms.

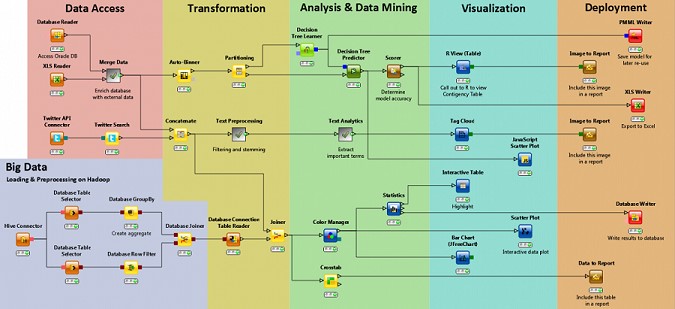

In this post, I would like to discuss the key features of KNIME that support the scaling of analytics. KNIME makes it easy to assemble and visually organize end-to-end data analysis workflows from components that perform atomic tasks on well-defined inputs and produce well-defined outputs.

1. Intelligent automation

KNIME has a workflow editor (view below) along with a large library of ‘nodes’. Each node performs a specific task, and nodes can be stitched together to form a complete solution, or workflow. For example, to create a typical data analysis flow, I would put together:

- data preprocessing

- transformations

- modeling

- post-processing of modeling results

- charting

and execute that workflow.
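
The flow above can be mimicked in plain Python to show the idea of chaining atomic steps. This is only an analogy and does not use any KNIME API. The function names, the toy data, and the stand-in "model" (flagging values above the mean) are all assumptions made for illustration.

```python
from functools import reduce

# Each function plays the role of one node: a single task with a
# well-defined input and a well-defined output. All names are hypothetical.

def preprocess(values):
    """Data preprocessing: drop missing records."""
    return [v for v in values if v is not None]

def transform(values):
    """Transformation: rescale values to the 0..1 range."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def model(values):
    """Stand-in model: flag each value that lies above the mean."""
    avg = sum(values) / len(values)
    return [(v, v > avg) for v in values]

def postprocess(scored):
    """Post-processing: count how many records fall on each side."""
    above = sum(1 for _, flag in scored if flag)
    return {"above": above, "below": len(scored) - above}

def chart(summary):
    """Charting: render the counts as a simple text bar chart."""
    return "\n".join(f"{label:<6}{'#' * count}" for label, count in summary.items())

def run_workflow(nodes, data):
    """Execute the nodes in order, feeding each output to the next node."""
    return reduce(lambda table, node: node(table), nodes, data)

WORKFLOW = [preprocess, transform, model, postprocess, chart]
raw = [12, None, 30, 18, None, 24]  # toy input with missing values
print(run_workflow(WORKFLOW, raw))
```

Because each step only depends on the output of the previous one, a step can be swapped or reconfigured without touching the rest of the chain, which is the property the node-based editor relies on.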

A KNIME workflow, though standardized, can incorporate customizations. For example, a continuous variable such as retail footfalls can be classified into discrete groups (frequent, moderate, occasional) to analyze its distribution. This ensures that business logic is added to the modeler’s statistical acumen, achieving what we like to call intelligent automation.
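
As a rough illustration of that kind of customization, the sketch below bins a continuous footfall count into the three groups named above. It is plain Python rather than a KNIME node, and the cut points (4 and 12 visits) and the sample data are assumptions; in practice the business would supply them.

```python
from bisect import bisect_right
from collections import Counter

# Hypothetical business rule (assumed, not from the original post):
#   fewer than 4 visits  -> occasional
#   4 to 11 visits       -> moderate
#   12 or more visits    -> frequent
THRESHOLDS = [4, 12]
LABELS = ["occasional", "moderate", "frequent"]

def footfall_group(visits):
    """Map a continuous visit count to its discrete group."""
    return LABELS[bisect_right(THRESHOLDS, visits)]

# Toy data: visits per customer over some period.
visits_per_customer = [1, 3, 4, 7, 12, 20, 2, 11]

groups = [footfall_group(v) for v in visits_per_customer]
distribution = Counter(groups)  # how many customers fall in each group
print(distribution)
```

Keeping the thresholds in one place means the business rule can be changed without altering the rest of the analysis.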

2. Visual workflow

KNIME has a graphical workflow editor, and its model architecture complements this. Process or logic is defined by configuring the flow and is not tied to any particular language or technology. This lets us manipulate, analyze, and model data intuitively through visual programming. Defining the complete solution to a problem in a workflow makes it quick to understand and keeps the solution concise, reusable, and structured.

3. The open perspective

"Data matures like wine, applications like fish" - James Governor, Founder of Redmonk.

KNIME has a unique counter to this decay: it is an ‘Open Platform’. This openness gives KNIME flexibility and room for collaboration, and results in a continuously improving platform, which:

- incorporates changes with emerging technologies,

- adds enriched feature sets from a very active user community,

- integrates with other open source projects like R, Weka, etc.

For this reason, users have consistently given it the highest rating for ease of use among open source software. For scaled solutions, this brings a self-improving and stable technology. KNIME thus supports scaled analytics through an ideal man-machine contribution, easy learning and acceptance, and an ever-improving platform.

References:

Magic Quadrant for Advanced Analytics Platforms

KNIME a 2014 Leader in Advanced Analytic Platform: Gartner Magic Quadrant

2013 Data Miner Survey